Exploring Emergency Savings As An Employee Benefit

Due to confidentiality agreements, I cannot show any real deliverables that were used at Vanguard. All deliverables shown are examples using dummy information. These are intended to demonstrate the type of work I do and the processes I followed.

Project Introduction:

When Congress passed the Secure Act 2.0 in late 2022, it introduced Pension-Linked Emergency Savings Accounts, or “PLESAs,” which are designed to function similarly to a 401(k) plan, but for emergency savings. These aim to incentivize participants to save for emergencies by leveraging employer match, high investment yields, and automatic payroll deduction.

Vanguard began exploring offering PLESA programs in 2024. To support this initiative, I was brought on as a Senior UX Researcher to gather feedback on how this program would be received by a wide range of end users.

Business Case: PLESA incentivizes 401(k) participants to keep more of their money at Vanguard. Customers who have more than one account at Vanguard have a higher likelihood of keeping their funds with the company, even after they change jobs.

Research Overview:

Objective:

To deliver quotes, trends, and insights to business partners that convey the potential impact of PLESAs and support strategic decision-making on whether and how to implement the proposed program.

Methodology:

1:1 interviews over Teams

Supplemental Survey

Tools:

Figma/FigJam

Qualtrics

Participants:

Interviews: 6 participants from each of the following groups

HR professionals - Potential administrators of the program

401(k) plan participants - Potential users or recipients of the program

Financial advisors - Potential advocates of the program

Survey: n=535 401(k) participants

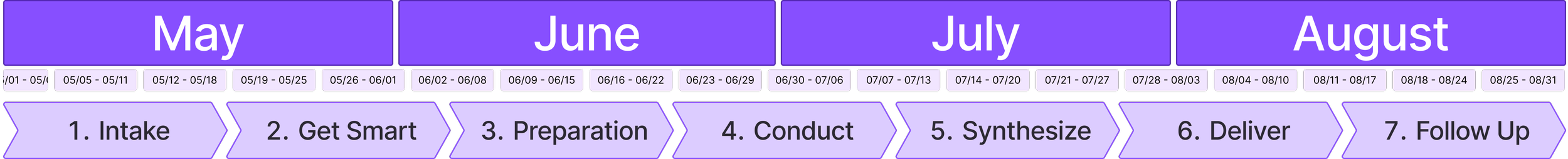

Timeline:

This study took place over 4 months. This is fairly long compared to my usual turnaround time of about 1.5 months. In this situation, the study was unexpected, so the 4 months include intake, concluding the prior study, and a longer than usual “Get Smart” phase.

This study covered 3 interview rounds and a supplemental survey, each requiring its own recruitment, script, and synthesis, which also extended the timeline.

A broad-strokes timeline is shown below, which also captures my typical 7-phase approach to planning, conducting, and delivering a study.

Phase 1: Intake

Background/Context:

In early 2024, I was assigned to the “Financial Wellness Journey” within Vanguard’s Workplace Solutions Department. We were responsible for anything related to financial education within the 401(k) space. We worked closely with the team that owned Vanguard’s high-yield savings account product, “Cash Plus,” because it was seen as the primary vehicle for emergency savings (a key component of financial wellbeing).

Meanwhile, Congress had passed the Secure Act 2.0, which now allows for PLESAs (Pension-Linked Emergency Savings Accounts). Cash Plus was identified as the product Vanguard would repurpose to answer the call for PLESAs, should Vanguard decide to pursue them.

Therefore, the product manager responsible for Cash Plus needed support in the form of gathering user sentiment related to this opportunity.

Research Request:

In early May, the product owner for Cash Plus, who was familiar with my work from the Financial Wellness Journey, reached out to request a UX research study to explore the viability of the PLESA program.

At the time, I was in the middle of a usability test and had another study scheduled to begin in June. I brought this request to the financial wellness team and my manager, who all gave the green light to deprioritize the upcoming study and focus on this one, as it had a larger potential impact and was seen as a dependency for moving forward with the rest of the PLESA exploration.

To go through proper channels, we had the Cash Plus product owner fill out our Research Intake Request Form. An example of this is shown below.

Phase 2: Get Smart

SME Interviews:

Once the project was approved, my first step was to schedule 1:1 meetings with relevant subject matter experts from within Vanguard.

For example, I met with the team responsible for all things Secure Act 2.0, who shared that clients had been showing interest in the PLESA provision and asking about it during meetings.

Secondary Research/Competitive Analysis:

A lot of behavioral research has been done on the concept of emergency savings as a whole. A paper published by the National Bureau of Economic Research was instrumental in influencing the inclusion of the PLESA provision in Secure Act 2.0.

One notable study demonstrated the potential impact of emergency savings accounts on low-income groups. It observed that workers who are paid into multiple accounts (rather than one account) tend to withdraw less during an emergency, and therefore save more in the long run.

Competitors like Fidelity were frontrunners in the PLESA space. They got a lot of positive publicity around a program they piloted with Delta Air Lines in which employees could receive up to $1,000 in employer contributions to an emergency savings account if they met certain criteria. This led the way in Vanguard’s understanding of what successful PLESA programs could look like.

Research Plan:

Once I felt confident in my understanding of the problem space surrounding PLESA, I began developing the research plan. I scheduled a small meeting for those closest to the project (the Financial Wellness product owner and the owner of the Cash Plus product). I walked them through a template I created to flesh out the research plan.

Most importantly, we identified the following three research objectives:

To gather first impressions from participants around the PLESA program.

To identify concerns that would need to be addressed.

To answer specific questions, such as “What is the optimal recommended employer contribution to incentivize participation?”

Other important topics we covered were:

Hypotheses and assumptions

Target participants and recruitment capabilities

Timeline and expected deliverables

Methodology and testing materials

Below is a recreation of what the research plan looked like (FigJam).

Phase 3: Prep

Stakeholder Engagement:

Since this project covered such a wide range of users and business lines, there were a lot of stakeholders to get buy-in from. With anyone who wasn’t involved in the research planning phase, I scheduled a separate one-on-one meeting to discuss their personal curiosities. I find this extremely important for achieving the following goals:

Get the stakeholder personally invested in the results of the study.

Gain valuable knowledge they might have about the project.

Get their support when dealing with roadblocks in the future (e.g., recruitment issues).

In this stage, I might ask a stakeholder questions like:

If you could ask our users any question, what question would it be?

How do you think users will answer when we ask them that question?

What type of information would help you perform your job if you had it?

Examples of stakeholders I met with include:

Tech teammates aligned to the Financial Wellness section of the participant site.

Relationship Managers responsible for communicating with HR professionals.

Business leaders who control the budget needed to pursue a program like this.

Stakeholders were often asked to add to the following Research Objectives template to document the topics they were most interested in exploring.

Participant Recruitment:

Since this study covered three different user groups, each group was recruited differently.

HR Professionals: I leveraged the “Vanguard Research Community,” in which plan sponsors agree in advance to be called upon to deliver feedback about new products. Even with this community in place, recruitment is extremely difficult because these users are so busy and we can’t legally offer them financial incentives. In practice, we send out the recruitment email and interview whoever agrees to meet. Luckily, we hit 6, which was my goal.

401(k) Plan Participants: In contrast to the HR professionals, Vanguard’s community of employee participants numbers in the thousands, and we are able to financially incentivize them. So when we sent out the recruitment email, hundreds of people responded. I was able to create an ideal sample in which demographics like age, income, race, and gender were all effectively balanced.

Consultants: Even harder to reach than HR professionals, consultants will essentially never answer a generic recruitment email. For this population, we had to do grassroots recruitment. Our strategy was to go to Vanguard crew members who interface with consultants regularly. I instructed them to broach the topic in an upcoming meeting and then CC me on a follow-up email. From there, I was able to schedule the interview.

The interviews were scheduled across about a month and a half. Each group took about a week to conduct, with a week in between to prep for the upcoming sessions. We conducted them in order of recruitment ease (Participants > HR Professionals > Consultants) to allow for hiccups when recruiting from the more difficult populations.

When preparing for this study, I referenced the personas that we have available at Vanguard, which look something like what is shown here.

Script Development:

Once the recruitment strategy was ready and moving forward, I began writing the scripts. Each group needed its own script, but they all followed a similar formula.

Questions like “What are your initial impressions of emergency savings as an employee benefit?” could be asked of all three groups, and participants will almost certainly answer from the perspective of the role they are filling at the moment. (For example, an HR professional will rarely answer that question from the perspective of a 401(k) participant.)

Part of my philosophy when doing open-ended discovery like this is to lead the participant as little as possible. Because of that, my scripts are often very sparse (5-10 questions max). Therefore, the primary interview skill becomes improvising and asking follow-up questions in the moment based on the direction the participant decides to take.

Phase 4: Conduct

Watch Parties:

In this study (and in pretty much every study I do that involves interviews), I coordinated “Watch Parties.” A watch party is when I interview a participant in one Teams meeting and stream that video to another Teams meeting, which anyone interested can attend.

The power behind the watch party is that the participant feels like it is an intimate conversation, while stakeholders are still able to listen in real time. This adheres to our consent form because participants are made aware that the sessions are being recorded and could be shared internally.

Additionally, since the stakeholders are all in another meeting, they are free to use the chat as much as they want. In this study, the chat was frequently active, with stakeholders asking follow-up questions or discussing interesting moments from the interview.

The best example of this was when a participant explained her experience struggling to save for emergencies. She keeps her emergency funds in a savings account at her bank and believes that the level of accessibility is actually a problem for her. She felt confident that hosting her emergency account at Vanguard could really help her practice the financial discipline she often struggles with. This story was very touching to the team.

The Watch Party Method:

Sharing Findings:

Although these findings were intended to roll up into a final report, the breadth of this study meant that it would be a long time between the first interview and the research readout. Because of this, we established a cadence of sharing “initial findings” directly after each round of interviews. This was usually in the form of a very informal Teams chat. I would note down the most impactful takeaways so stakeholders could review and discuss if needed.

Phase 5: Synthesize

Note Taking:

I use a FigJam-based note-taking method that ends up looking something like what is shown below. When you select a sticky and press “Command + Return,” it starts a new sticky directly to the right. This allows me to take notes quickly, with each sticky holding a separate thought.

In this study, I had 3 groups of 6. At that scale, I used color to differentiate user groups. In the hypothetical example below, color represents role: green is for sales, blue is for receptionists, and red is for managers. This makes groupings easy to see once the notes are affinitized.

I also include a picture of the participant so I can easily recall them, as well as any pertinent information, such as the size of their company or the consulting firm they work for.

Affinity Mapping:

After transcribing all the notes and quotes, I began to categorize them by subtheme, which then ladder up to a broader theme.

A “subtheme” could be a simple idea like “research participants observe a growing trend among companies to place a higher priority on their employees’ holistic financial wellness,” whereas “themes” are more akin to categories like “employer disposition.”

As you can see, some themes are repeated by multiple participants, whereas others might only be mentioned once. Repetition does not imply greater importance; it simply shows how top of mind the topic is to participants.

Lastly, as demonstrated below, the color coding method allows for quick differentiation by persona. In this hypothetical scenario, Theme 3, Subtheme 1 is agreed upon by all participants, whereas Theme 2, Subtheme 1 was only ever mentioned by managers.

An example of this in practice would be how HR Professionals might mention the company’s ability to financially incentivize participants, and participants might mention the way a financial incentive might drive them to engage with the program. These would be separate subthemes that then ladder up to an overarching theme of “Incentives.”

Phase 6: Deliver

Presentation Deck:

The first deliverable I began work on was the Presentation Deck, which is distinct from a “report.” In this deck, I prioritize findings based on the most impactful way to share them when presenting to a group.

For example, in this slide I only have a title and a quote. This would be a jumping off point for me to discuss the topic being addressed in detail without overwhelming the listener with too many words on the screen.

I chose to do this first because I felt pushed to deliver as quickly as possible due to some upcoming budget planning discussions. Additionally, I prefer to start with the Presentation Deck because it is easier for me to get my thoughts in order when I imagine telling them to someone, as I would in a story or presentation.

Report Library Deck:

Equally important to the Presentation Deck, though, is the Report Library Deck. In this deck, I outline all of the findings in great detail. The audience is expected to read the content without me present, which requires much more detail.

This deck also references the exact data source, primarily for use by other researchers who want to follow up or expand upon the research. This is especially relevant now because I have left the Financial Wellness Journey, so another researcher would be responsible for this project going forward.

In practice, this is the less impactful deck in my opinion. When I work with stakeholders, they typically want only a few powerful quotes or ideas that they can walk away with. The report library deck ends up going too far into detail, but is still necessary for full delivery.

For example, one HR Professional volunteered his company to “pilot” the program. Sometimes this fact was the only thing stakeholders would take away from the deck. This can be disheartening, but at least they’re walking away with something, right?

One-Pagers:

Last are the one-pagers. This is typically a favorite deliverable of mine because it combines the best of the previous decks into one.

In the PLESA study, I made many different one-pagers. I wrote a short description of each round of research (HR, Participant, and Consultant), but these were quick and simple.

Once the project was completed, I put two more one-pagers together.

One for use internally, to be shared with other stakeholders and business leaders.

One for use externally, to be shared with the consultants who participated in the study. This is an important part of our practice because consultants see receiving the findings of the research as part of their “compensation” for participating.

This was a delicate balancing act, though, because we did not want to advertise to consultants that Vanguard was definitely moving forward with PLESA (spoiler), so the language in this one-pager needed to be reviewed by legal and content multiple times.

Phase 7: Follow Up

Supplemental Survey:

After delivering the various reports, the project lead who sponsored the study in the first place realized she also wanted quantitative data to back up the findings.

The survey covered a wide range of topics like:

Validation of the finding that 401(k) participants would take advantage of the program.

Specifics on the dollar value of the incentive needed to drive participation.

A deeper dive into what affects the participation rate.

One of the most interesting takeaways from this part of the study was that high-income 401(k) participants reported being more likely than low-income participants to take advantage of the program. Our theory is that high-income 401(k) participants are more capable of saving and more aware of the need for emergency savings.

Continued Partnership:

Once all of the reports were delivered, I continued to work with the project lead for quite a while, participating in business and finance conversations as the representative for the end user in the room.

Conclusion

Where is this project now?

Although we saw a lot of interest from participants, PLESAs have still not been implemented by Vanguard.

The primary reason is a lack of confidence that participants would keep their money in the account, rather than simply contributing funds, receiving an employer match, and then leaving with the money as soon as they have access to it.

The research suggests otherwise to some degree, with many individuals saying they would feel driven to use Vanguard’s high-yield savings account offering if it were sponsored and partly funded by their employer.

Still, the uncertainty is what held up the accounts from being created. Perhaps as the program becomes more popular among competitors like Fidelity, Vanguard will finally take up the mantle.

What could have been done differently?

Overall, I think this project went very well. I wish I had been able to continue working on it and driving it forward in the business. Unfortunately, I was assigned to a new project shortly after the conclusion of the study, and have only been able to keep up with it as a passion project.

Additionally, I think we could have gotten to the heart of the financial considerations earlier. The early stakeholder conversations did not surface the need to prove that participants would keep funds in the account after contributing. Had this roadblock been identified earlier, the research could have addressed it more directly.

Lastly, I would have loved to set up a testing environment in which we simulated 401(k) participants signing up for and contributing to a theoretical account. I think that having stimuli would have provided some interesting additional context, but the design resources just weren’t available at the time.

Want to learn more?

Thanks for making it this far! I love talking about this project, as well as my passion for User Experience Research as a whole. I’ve only scratched the surface of this topic, so if you’d like to hear more about Vanguard’s exploration into PLESA, or discuss professional opportunities, please contact me via the information below!